steve_bank

Diabetic retinopathy and poor eyesight. Typos ...

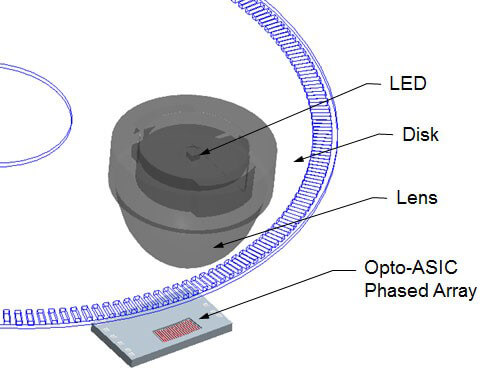

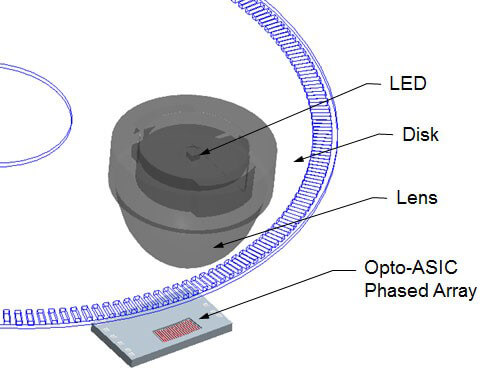

A Gray code is a binary code in which only one bit changes between successive values. One modern use is in rotary position encoders. Shining light through holes in a disk patterned in straight binary is problematic: when several bits change simultaneously, it is hard to determine the position unambiguously.
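As a rough illustration of the encoder problem, the sketch below counts how many bits flip between neighboring positions on a hypothetical 3-bit disk, once with straight binary and once with Gray code. The function names and the 8-position disk are assumptions made for the example, not taken from any encoder datasheet.

```python
def to_gray(n: int) -> int:
    """Reflected binary Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def bits_changed(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def max_bits_changed(codes: list[int]) -> int:
    """Largest number of bits that flip between neighboring codes."""
    return max(bits_changed(a, b) for a, b in zip(codes, codes[1:]))


# Hypothetical 3-bit disk with 8 positions.
positions = list(range(8))
binary_track = positions
gray_track = [to_gray(p) for p in positions]

print(max_bits_changed(binary_track))  # 3 (011 -> 100 flips every bit)
print(max_bits_changed(gray_track))    # 1
```

With straight binary, a sensor read in the middle of the 3 to 4 transition could momentarily see any 3-bit value. With Gray code, a read can only be off by one position.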

A simple math technique with wide usage.

en.wikipedia.org

www.dynapar.com

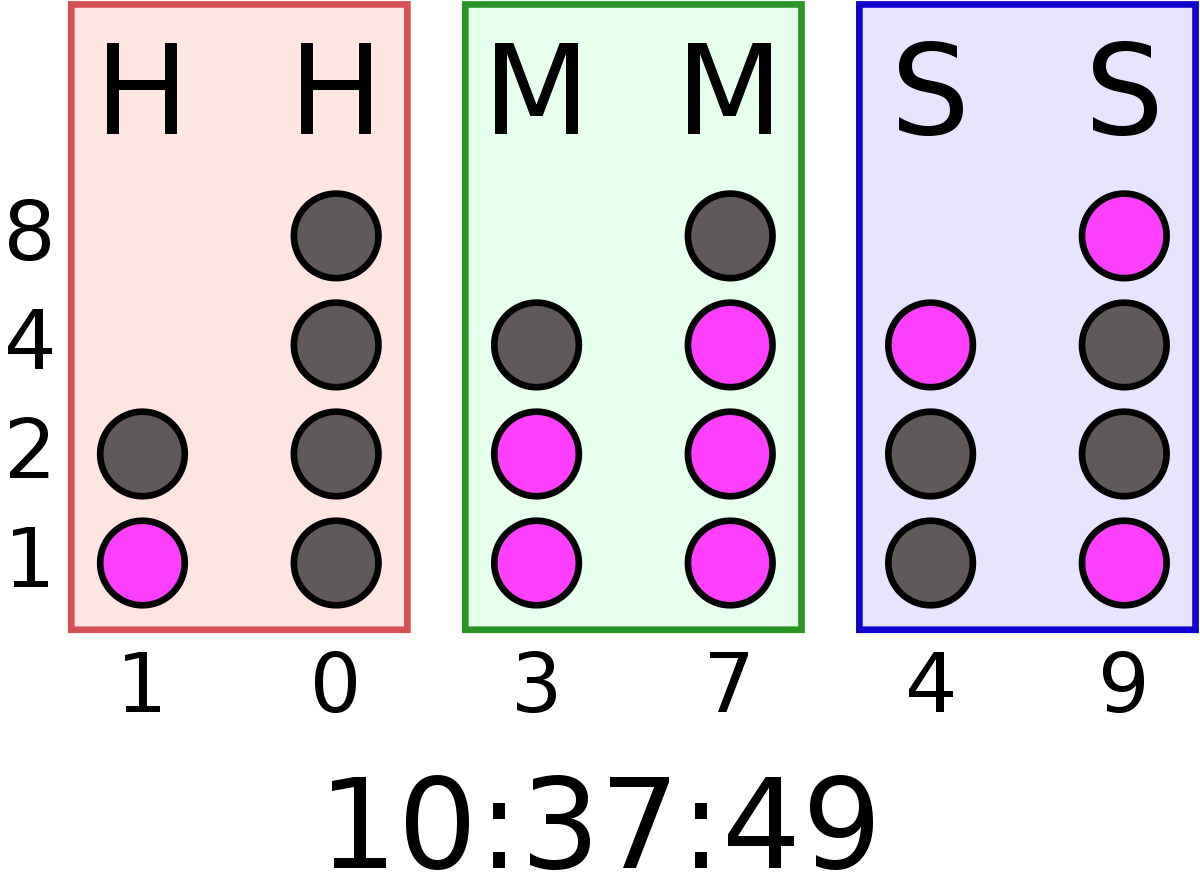

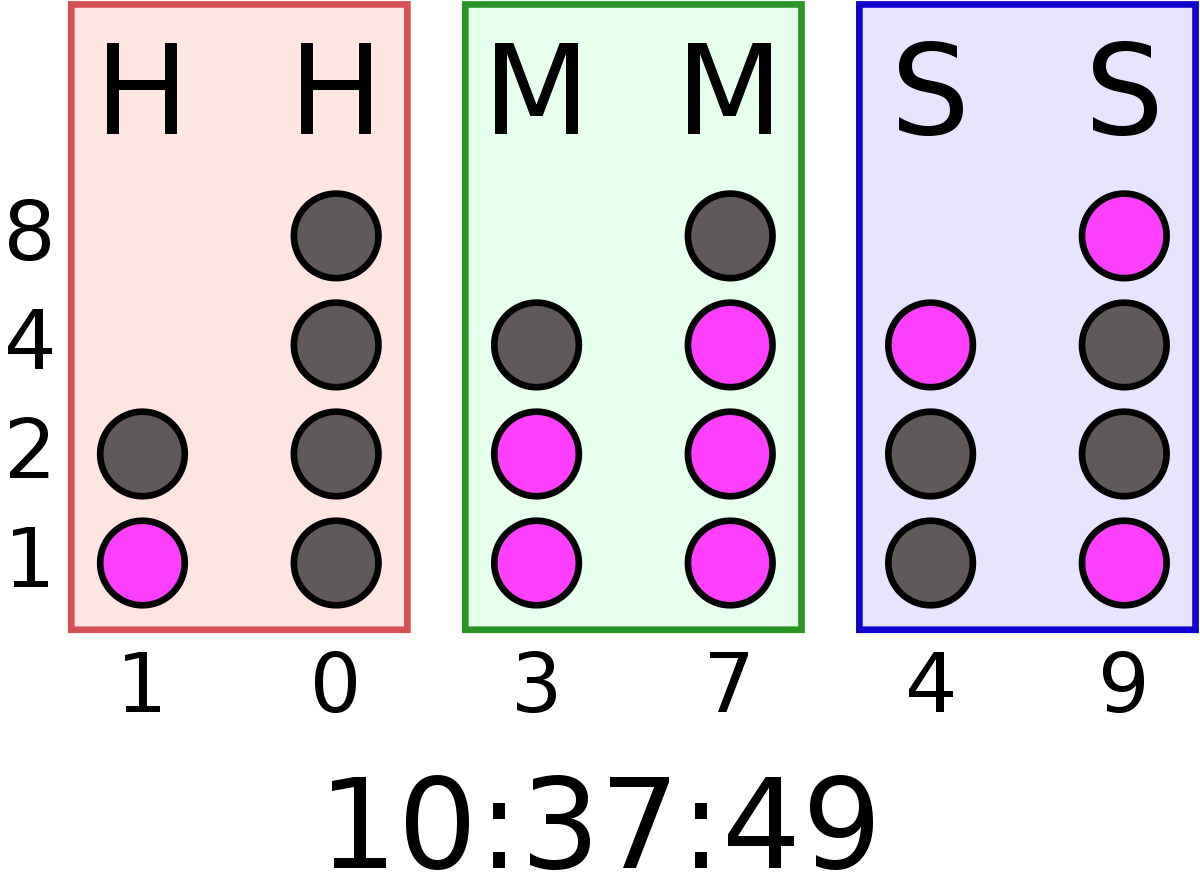

BCD Binary Coded Decimal

You can do arithmetic directly in BCD. Some early processors implemented BCD arithmetic in hardware.
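A minimal sketch of BCD addition, assuming packed BCD (one decimal digit per 4-bit nibble) held in a Python integer. The name `bcd_add` and the fixed digit count are choices made for this example. The "add 6" correction is the usual decimal-adjust trick: it skips the six nibble values 1010 to 1111 that are not valid decimal digits.

```python
def bcd_add(a: int, b: int, digits: int = 4) -> int:
    """Add two packed-BCD values digit by digit.

    Each nibble of a and b must hold a decimal digit 0..9.
    A carry out of the top digit is dropped (overflow).
    """
    result = 0
    carry = 0
    for i in range(digits):
        shift = 4 * i
        s = ((a >> shift) & 0xF) + ((b >> shift) & 0xF) + carry
        if s > 9:
            s += 6      # skip the six unused codes 1010..1111
            carry = 1
        else:
            carry = 0
        result |= (s & 0xF) << shift
    return result


# 275 + 148 = 423, written as hex literals so each nibble is one digit.
print(hex(bcd_add(0x0275, 0x0148)))  # 0x423
```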

en.wikipedia.org

Gray code - Wikipedia

The reflected binary code (RBC), also known as reflected binary (RB) or Gray code after Frank Gray, is an ordering of the binary numeral system such that two successive values differ in only one bit (binary digit).

For example, the representation of the decimal value "1" in binary would normally be "001" and "2" would be "010". In Gray code, these values are represented as "001" and "011". That way, incrementing a value from 1 to 2 requires only one bit to change, instead of two.
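The conversion between binary and reflected Gray code can be sketched in a few lines. This is a minimal example with function names chosen for illustration. It uses the standard identity that the Gray code of `n` is `n XOR (n >> 1)`.

```python
def binary_to_gray(n: int) -> int:
    """Reflected binary Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def gray_to_binary(g: int) -> int:
    """Inverse conversion: XOR together g and all its right shifts."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


for value in range(4):
    gray = binary_to_gray(value)
    print(value, format(value, "03b"), format(gray, "03b"))
# 0 000 000
# 1 001 001
# 2 010 011
# 3 011 010
```

The rows for 1 and 2 match the example above: "001" and "011" differ in a single bit.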

Gray codes are widely used to prevent spurious output from electromechanical switches and to facilitate error correction in digital communications such as digital terrestrial television and some cable TV systems. The use of Gray code in these devices helps simplify logic operations and reduce errors in practice.[3]

Optical Encoders: Magnetic vs Optical Encoder Engines | Dynapar

Binary-coded decimal - Wikipedia

In computing and electronic systems, binary-coded decimal (BCD) is a class of binary encodings of decimal numbers where each digit is represented by a fixed number of bits, usually four or eight. Sometimes, special bit patterns are used for a sign or other indications (e.g. error or overflow).

In byte-oriented systems (i.e. most modern computers), the term unpacked BCD[1] usually implies a full byte for each digit (often including a sign), whereas packed BCD typically encodes two digits within a single byte by taking advantage of the fact that four bits are enough to represent the range 0 to 9. The precise four-bit encoding, however, may vary for technical reasons (e.g. Excess-3).
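To make the packed versus unpacked distinction concrete, here is a small sketch for non-negative integers. The function names are made up for the example, sign handling is left out, and plain 8-4-2-1 digit encoding is assumed rather than a variant such as Excess-3.

```python
def to_unpacked_bcd(n: int) -> bytes:
    """One decimal digit per byte."""
    return bytes(int(d) for d in str(n))


def to_packed_bcd(n: int) -> bytes:
    """Two decimal digits per byte, high nibble first."""
    s = str(n)
    if len(s) % 2:
        s = "0" + s  # pad to an even number of digits
    return bytes((int(s[i]) << 4) | int(s[i + 1]) for i in range(0, len(s), 2))


def from_packed_bcd(data: bytes) -> int:
    """Decode packed BCD back to an integer."""
    value = 0
    for byte in data:
        value = value * 100 + (byte >> 4) * 10 + (byte & 0xF)
    return value


print(to_unpacked_bcd(1234).hex())  # 01020304
print(to_packed_bcd(1234).hex())    # 1234
print(from_packed_bcd(to_packed_bcd(1234)))  # 1234
```

A side effect of packed BCD is that the hex dump of the bytes reads as the decimal number itself.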

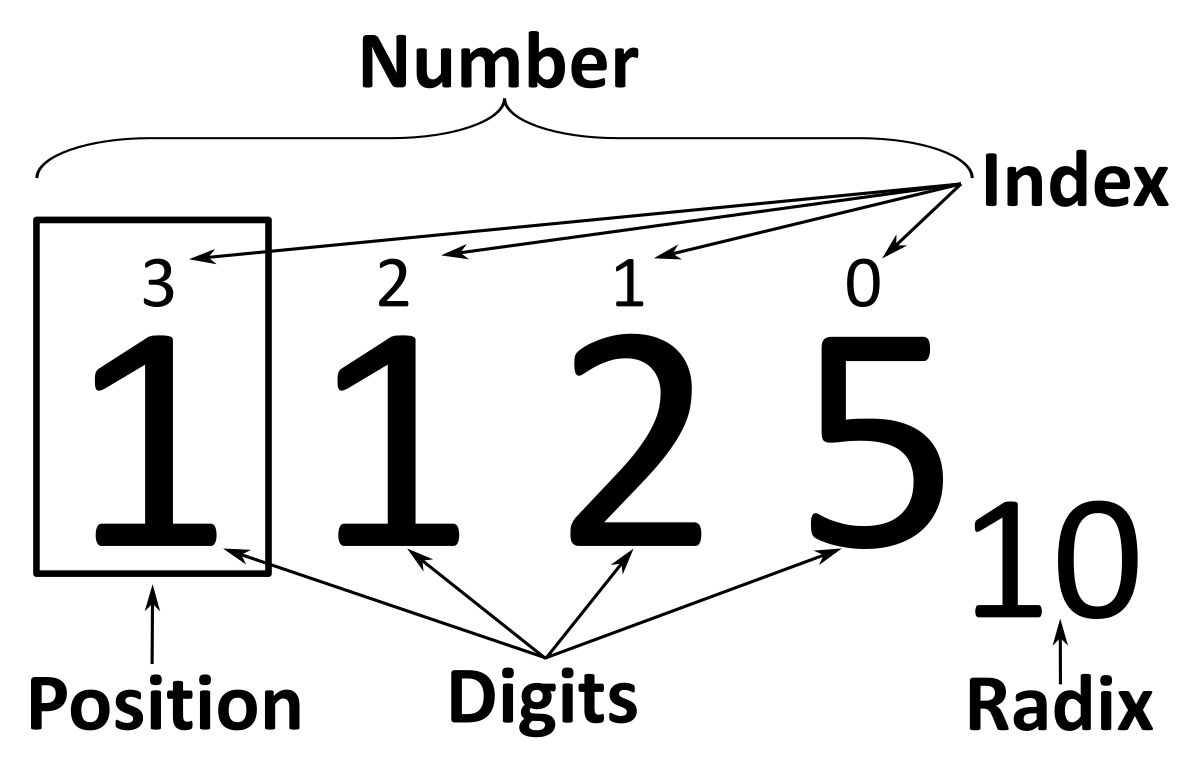

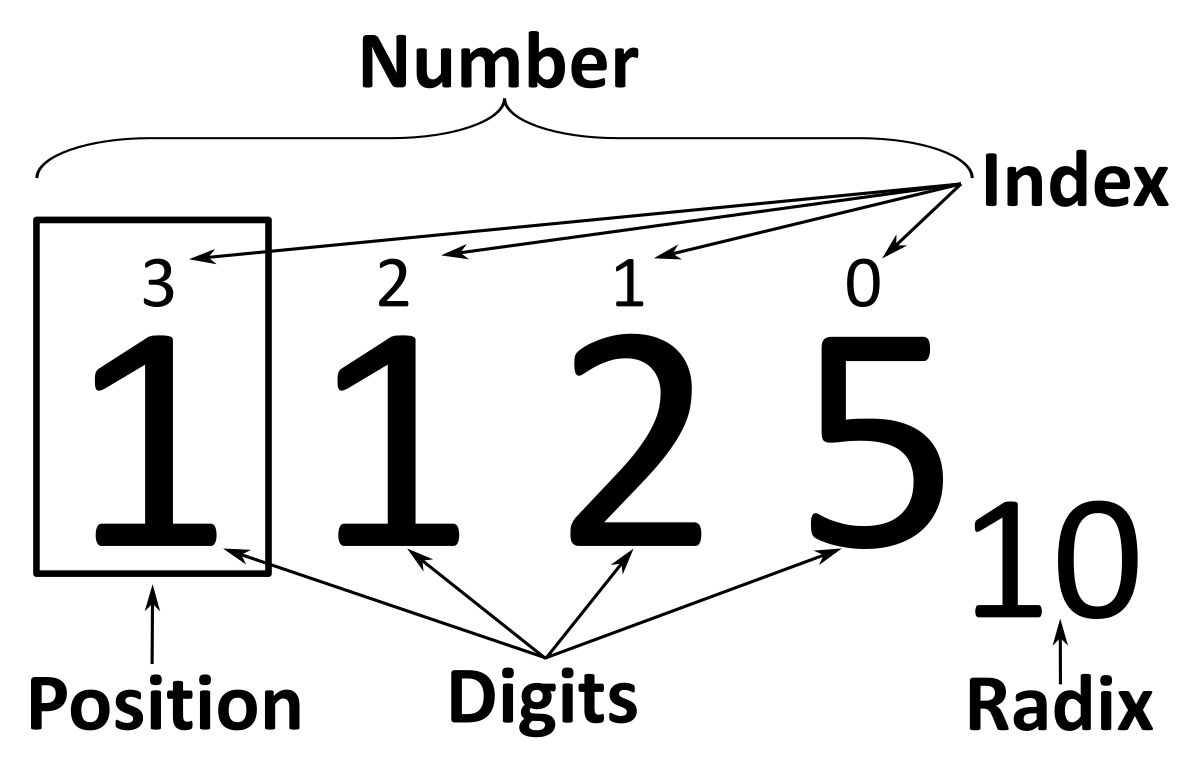

Positional notation - Wikipedia

Positional notation (or place-value notation, or positional numeral system) usually denotes the extension to any base of the Hindu–Arabic numeral system (or decimal system). More generally, a positional system is a numeral system in which the contribution of a digit to the value of a number is the value of the digit multiplied by a factor determined by the position of the digit. In early numeral systems, such as Roman numerals, a digit has only one value: I means one, X means ten and C a hundred (however, the value may be negated if placed before another digit). In modern positional systems, such as the decimal system, the position of the digit means that its value must be multiplied by some value: in 555, the three identical symbols represent five hundreds, five tens, and five units, respectively, due to their different positions in the digit string.
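The 555 example can be worked through in code. This is a small sketch with illustrative function names that takes digits most significant first and works for any base of 2 or more.

```python
def digit_contributions(digits: list[int], base: int = 10) -> list[int]:
    """Value contributed by each digit: digit * base**position."""
    n = len(digits)
    return [d * base ** (n - 1 - i) for i, d in enumerate(digits)]


def positional_value(digits: list[int], base: int = 10) -> int:
    """Value of a digit string, most significant digit first."""
    value = 0
    for d in digits:
        if not 0 <= d < base:
            raise ValueError(f"digit {d} is not valid in base {base}")
        value = value * base + d
    return value


print(digit_contributions([5, 5, 5]))    # [500, 50, 5]
print(positional_value([5, 5, 5]))       # 555
print(positional_value([1, 0, 1], 2))    # 5
```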