steve_bank

Diabetic retinopathy and poor eyesight. Typos ...

Transforms.pdf (uncw.edu)

lecture8 (stanford.edu)

Convolution -- from Wolfram MathWorld

As applied mathematics the Fourier Transform is ubiquitous and foundational, especially in engineering. Fourier showed that a periodic function satisfying mild conditions can be decomposed into a sine-cosine series. Outside of simple textbook problems it is not practical to apply the transform by evaluating the integral, so it is done digitally. The basic algorithm is straightforward, a series summation, and in the early days of PCs the transform was used as a speed benchmark.
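Here is a minimal Python sketch of the series summation, evaluated directly. Python is only a stand-in for the Scilab script in the images, and the function name `dft` is hypothetical. Each output bin is a sum of N complex products, so the work grows as N².

```python
import cmath
import math


def dft(x):
    """Direct DFT: X[k] = sum over n of x[n] * exp(-2j*pi*k*n/N).

    Each of the N outputs is a sum of N complex products, so the cost
    grows as N**2 multiplications.
    """
    n_points = len(x)
    out = []
    for k in range(n_points):
        total = 0j
        for n, sample in enumerate(x):
            total += sample * cmath.exp(-2j * math.pi * k * n / n_points)
        out.append(total)
    return out


if __name__ == "__main__":
    # 8 samples of a cosine completing one cycle over the record.
    N = 8
    signal = [math.cos(2 * math.pi * n / N) for n in range(N)]
    for k, value in enumerate(dft(signal)):
        # Scaled by 1/N: bins 1 and 7 come out at 0.5, the rest at 0.
        print(k, round(abs(value) / N, 3))
```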

The Discrete Fourier Transform (DFT) is the direct solution. It involves a lot of multiplications, on the order of N² for an N-point transform, and on early computers it was agonizingly slow. On my first PC, without a math coprocessor, a 1024-point transform could take a while. The Fast Fourier Transform (FFT) is an optimized algorithm that computes the same DFT in on the order of N log N operations. For 1024 points that is roughly a million operations versus about ten thousand.

You can look at the links, and there are C code implementations on the net. There is no need to clutter the thread with equations. The coding is straightforward; way back people did it in GW-BASIC.
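One common form of the FFT optimization is the recursive radix-2 Cooley-Tukey algorithm. The sketch below uses that variant, which is not necessarily the one Scilab uses internally. It assumes the record length is a power of 2, and the name `fft` is hypothetical. Splitting the record into even- and odd-indexed halves and reusing the two half-size transforms is what reduces N² to about N log2 N.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of 2.

    Splitting into even- and odd-indexed halves and combining the two
    half-size transforms cuts the work to about N*log2(N).
    """
    n_points = len(x)
    if n_points == 1:
        return [complex(x[0])]
    if n_points % 2:
        raise ValueError("length must be a power of 2")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    half = n_points // 2
    out = [0j] * n_points
    for k in range(half):
        # "Twiddle factor" rotates the odd half before combining.
        twiddle = cmath.exp(-2j * math.pi * k / n_points) * odd[k]
        out[k] = even[k] + twiddle
        out[k + half] = even[k] - twiddle
    return out


if __name__ == "__main__":
    N = 1024
    signal = [math.sin(2 * math.pi * 50 * n / N) for n in range(N)]
    spectrum = fft(signal)
    peak = max(range(N // 2), key=lambda k: abs(spectrum[k]))
    # Expect bin 50 with a scaled amplitude of 0.5.
    print("peak bin:", peak, "scaled amplitude:", round(abs(spectrum[peak]) / N, 3))
```

The recursion is the easiest form to read. Production FFTs usually work in place with bit-reversed indexing instead.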

The script is in Scilab, but it can be ported to other tools. Scilab is free if you want to experiment, and it can import audio files for speech processing. Excel's Analysis ToolPak add-in includes a Fourier Analysis tool.

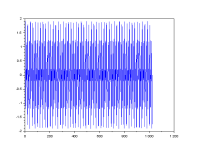

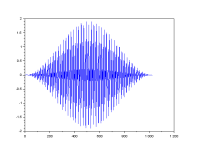

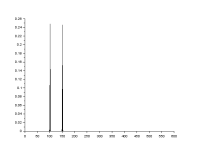

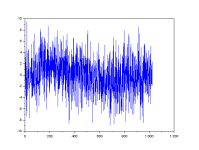

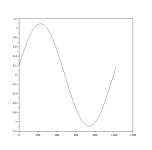

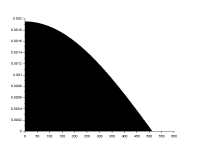

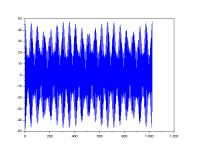

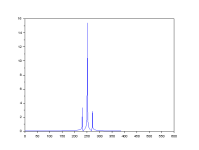

The code generates sine waves and pulses. The spectral analysis of pulses has significance in digital electronics and communications.

To add noise to the sines, make the mean and std variables nonzero. Setting both to 0 means no noise.
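Here is a sketch of the noise option, assuming the noise is Gaussian (normally distributed), which the mean and std names suggest. The actual Scilab code is in the images and may differ, and `noisy_sine` and its parameters are hypothetical. Note that std sets the noise level, while a nonzero mean also adds a constant (DC) offset that shows up in bin 0 of the spectrum.

```python
import math
import random


def noisy_sine(n_points, cycles, amplitude, mean, std, seed=None):
    """Sine completing `cycles` cycles over the record, plus Gaussian noise.

    mean = 0 and std = 0 gives a clean sine. std sets the noise level;
    a nonzero mean adds a constant (DC) offset to every sample.
    """
    rng = random.Random(seed)
    return [
        amplitude * math.sin(2 * math.pi * cycles * n / n_points)
        + rng.gauss(mean, std)
        for n in range(n_points)
    ]


if __name__ == "__main__":
    print(noisy_sine(8, 1, 1.0, 0.0, 0.0))           # clean sine
    print(noisy_sine(8, 1, 1.0, 0.0, 0.1, seed=1))   # sine plus noise
```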

The signal can be a combination of two sines. For a single sine, set one amplitude to zero.
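Here is a minimal sketch of the two-sine signal, with frequencies in Hz and a sample rate as assumed parameters. The name `two_sines` is hypothetical and not taken from the script.

```python
import math


def two_sines(n_points, sample_rate, f1, a1, f2, a2):
    """Sum of two sines sampled at sample_rate; set a1 or a2 to 0 for one tone."""
    return [
        a1 * math.sin(2 * math.pi * f1 * n / sample_rate)
        + a2 * math.sin(2 * math.pi * f2 * n / sample_rate)
        for n in range(n_points)
    ]


if __name__ == "__main__":
    # 64 samples at 64 Hz: bins are 1 Hz apart.
    both = two_sines(64, 64.0, 5.0, 1.0, 12.0, 0.5)
    single = two_sines(64, 64.0, 5.0, 1.0, 12.0, 0.0)  # second amplitude zeroed
    print(both[:4])
    print(single[:4])
```

The bin spacing is sample_rate / n_points. When f1 and f2 are integer multiples of that spacing, each sine lands exactly on one line. Otherwise its energy spreads into neighboring bins (leakage).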

The pulse function will place a pulse anywhere in the record.
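Here is a sketch of a rectangular pulse placed anywhere in the record, with hypothetical names `pulse` and `magnitude_spectrum`. It also illustrates the DFT shift property: moving the pulse (without wrapping past the end of the record) changes only the phase of each line, so the magnitude spectrum stays the same.

```python
import cmath
import math


def pulse(n_points, start, width, height=1.0):
    """Record of zeros with a rectangular pulse covering [start, start + width)."""
    if start < 0 or start + width > n_points:
        raise ValueError("pulse must fit inside the record")
    return [height if start <= n < start + width else 0.0 for n in range(n_points)]


def magnitude_spectrum(x):
    """|X[k]| / N from a direct DFT (slow, but fine for short records)."""
    n_points = len(x)
    return [
        abs(sum(v * cmath.exp(-2j * math.pi * k * n / n_points) for n, v in enumerate(x)))
        / n_points
        for k in range(n_points)
    ]


if __name__ == "__main__":
    early = magnitude_spectrum(pulse(64, 4, 8))
    late = magnitude_spectrum(pulse(64, 40, 8))
    # Moving the pulse only changes the phase; the magnitudes agree
    # to rounding error.
    print(max(abs(a - b) for a, b in zip(early, late)))
```

The magnitude follows a sinc-like shape whose first null falls at bin N / width. A narrower pulse therefore spreads its energy over a wider band, which is why pulse spectra matter in digital electronics and communications.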

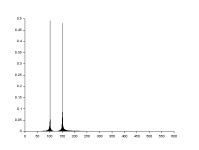

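For a real input signal, the spectrum from the transform is symmetric, with two mirror-image halves. Each sine's amplitude is split between its line in each half, so (with the transform scaled by 1/N) a sine of amplitude 1 shows a line amplitude of 0.5.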
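Here is a sketch that checks the mirror symmetry and folds the two halves into a one-sided spectrum. It assumes an even record length, and the names `dft` and `one_sided_amplitudes` are hypothetical. For a real signal, bin N - k is the complex conjugate of bin k. Doubling bins 1 through N/2 - 1 therefore recovers the sine's true amplitude.

```python
import cmath
import math


def dft(x):
    """Direct DFT by series summation."""
    n_points = len(x)
    return [
        sum(v * cmath.exp(-2j * math.pi * k * n / n_points) for n, v in enumerate(x))
        for k in range(n_points)
    ]


def one_sided_amplitudes(x):
    """Fold the mirrored upper half onto the lower half (even N assumed).

    Bins 1..N/2-1 are doubled so a sine reads at its true amplitude;
    DC (bin 0) and Nyquist (bin N/2) have no mirror partner and are not doubled.
    """
    spectrum = dft(x)
    n_points = len(x)
    half = n_points // 2
    amps = [abs(spectrum[k]) / n_points for k in range(half + 1)]
    for k in range(1, half):
        amps[k] *= 2
    return amps


if __name__ == "__main__":
    N = 32
    signal = [math.sin(2 * math.pi * 5 * n / N) for n in range(N)]
    spectrum = dft(signal)
    # Real input: bin N - k is the complex conjugate of bin k.
    print(abs(spectrum[N - 5] - spectrum[5].conjugate()) < 1e-9)  # True
    print(round(abs(spectrum[5]) / N, 6))              # two-sided line: 0.5
    print(round(one_sided_amplitudes(signal)[5], 6))   # one-sided line: 1.0
```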

I did not add phase. To find the phase of a line, take the arctangent of imag/real of the transform value, and determine the quadrant from the signs of the imaginary and real parts. A two-argument arctangent (atan2) does both in one step.
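Here is a sketch of the phase calculation using Python's `math.atan2`, which picks the quadrant from the signs of both parts and also handles a zero real part. The function name `line_phase_degrees` is hypothetical. Phase is measured relative to a cosine, so a pure sine reads -90 degrees.

```python
import cmath
import math


def line_phase_degrees(value):
    """Quadrant-correct phase of one DFT bin, in degrees.

    math.atan2(imag, real) uses the signs of both parts to pick the
    quadrant, which atan(imag / real) alone cannot do.
    cmath.phase(value) returns the same angle in radians.
    """
    return math.degrees(math.atan2(value.imag, value.real))


if __name__ == "__main__":
    N = 16
    # A cosine advanced by 60 degrees, three cycles over the record.
    signal = [math.cos(2 * math.pi * 3 * n / N + math.radians(60)) for n in range(N)]
    bin3 = sum(v * cmath.exp(-2j * math.pi * 3 * n / N) for n, v in enumerate(signal))
    print(round(line_phase_degrees(bin3), 3))  # 60.0
```

Phase is only meaningful on lines with real energy. On bins whose magnitude is near zero, the angle is just rounding noise.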
