lpetrich

Contributor

When Isaac Newton and Gottfried Wilhelm Leibniz developed the calculus, they defined differential calculus with "infinitesimals", nonzero numbers that are closer to zero than any ordinary nonzero number. That seemed nonsensical to some people, and in the 19th century, mathematicians developed the concept of limits, making infinitesimals unnecessary.

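Infinitesimals are well-defined in something called "nonstandard analysis", however. A simpler algebraic version is the "dual numbers", where an infinitesimal is some number x times an infinitesimal unit e, where e^2 = 0. (In nonstandard analysis proper, the square of a nonzero infinitesimal is a still smaller infinitesimal rather than exactly zero.)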
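To make the "infinitesimal unit whose square is zero" idea concrete, here is a minimal Python sketch. The class name `Dual`, the helper `_as_dual`, and the example function `cube` are my own illustrative choices, not anything standard. It only implements addition and multiplication, which is enough to show that evaluating f(x + e) gives f(x) plus f'(x) times e.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A number real + eps*e, where e is an infinitesimal unit with e*e = 0."""
    real: float
    eps: float = 0.0

    def __add__(self, other):
        other = _as_dual(other)
        return Dual(self.real + other.real, self.eps + other.eps)

    __radd__ = __add__

    def __mul__(self, other):
        other = _as_dual(other)
        # (a + b e)(c + d e) = ac + (ad + bc) e, because the e*e term vanishes.
        return Dual(self.real * other.real,
                    self.real * other.eps + self.eps * other.real)

    __rmul__ = __mul__


def _as_dual(value):
    """Promote an ordinary number to a Dual with zero infinitesimal part."""
    return value if isinstance(value, Dual) else Dual(float(value))


def cube(x):
    """Example function f(x) = x^3, written with multiplication only."""
    return x * x * x


E = Dual(0.0, 1.0)            # the infinitesimal unit e
result = cube(Dual(2.0) + E)  # f(2 + e) = f(2) + f'(2) e
print(E * E)                  # the square of e is exactly zero
print(result)                 # real part is f(2), eps part is f'(2)
```

For f(x) = x^3 at x = 2, the real part comes out as 8 and the coefficient of e as 12, which matches f'(x) = 3x^2.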

Here is the definition of the limit L of a function f(x) as x goes to a.

For every value eps > 0, there is some value del > 0 such that for every x satisfying 0 < |x - a| < del, |f(x) - L| < eps.
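The definition can be spot-checked numerically. The sketch below is only an illustration under my own assumptions: the function name `find_delta`, the halving search, and the sample count are all made up for the example, and testing finitely many points is evidence, not a proof. It looks for a del that works for a given eps, using f(x) = x^2 near a = 3 with L = 9.

```python
def find_delta(f, a, L, eps, samples=1000):
    """Search for a delta that works for the given eps by repeated halving.

    This samples points with 0 < |x - a| < delta on both sides of a.
    It is a numerical spot check, not a proof.
    """
    delta = 1.0
    for _ in range(60):
        offsets = [delta * k / (samples + 1) for k in range(1, samples + 1)]
        points = [a + h for h in offsets] + [a - h for h in offsets]
        if all(abs(f(x) - L) < eps for x in points):
            return delta
        delta /= 2.0
    return None  # no suitable delta found within the search range


def square(x):
    return x * x


# A smaller eps should force a smaller (or equal) delta.
deltas = {eps: find_delta(square, 3.0, 9.0, eps) for eps in (1.0, 0.1, 0.01)}
for eps, delta in deltas.items():
    print(f"eps = {eps}: delta = {delta}")
```

Giving the wrong L (say 10 instead of 9) with a small eps makes the search come back empty, which is the numerical shadow of "the limit is not 10".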

This can be extended to infinite limits and limits of infinite series, and one can also do one-sided limits, limits from x > a or x < a.

For a positive infinite limit at some point:

For every value eps, however large, there is some value del > 0 such that for every x satisfying 0 < |x - a| < del, f(x) > eps.

One can also do negative infinity, f(x) < eps for every eps, however negative, and both-directions and complex infinity, |f(x)| > eps.
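As a small illustration of an infinite limit at a point, take f(x) = 1/x^2 near a = 0. For this particular function one can choose del = 1/sqrt(eps). The Python sketch below checks that choice on sampled points; the helper names `delta_for_bound` and `exceeds_bound` are hypothetical, and sampling is only a sanity check.

```python
import math


def delta_for_bound(bound):
    """For f(x) = 1/x**2 near a = 0: 0 < |x| < 1/sqrt(bound) gives f(x) > bound."""
    return 1.0 / math.sqrt(bound)


def exceeds_bound(f, a, bound, delta, samples=1000):
    """Spot-check f(x) > bound at sampled points with 0 < |x - a| < delta."""
    offsets = [delta * k / (samples + 1) for k in range(1, samples + 1)]
    points = [a + h for h in offsets] + [a - h for h in offsets]
    return all(f(x) > bound for x in points)


def inverse_square(x):
    return 1.0 / (x * x)


# However large the bound, the matching delta keeps f(x) above it.
results = [exceeds_bound(inverse_square, 0.0, b, delta_for_bound(b))
           for b in (10.0, 1e3, 1e6)]
print(results)
```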

For the limit as x tends to positive infinity:

For every value eps > 0, there is some value del such that for every x > del, |f(x) - L| < eps.

One can also do negative infinity, x < del, and both-directions and complex infinity, |x| > del.

One can combine these two types of limits to define an infinite limit as x tends to infinity.
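Here is the same sort of sanity check for a limit at infinity, using f(x) = 1/x with L = 0, where del = 1/eps does the job. The names `threshold_for` and `check_tail` are my own, and only finitely many points beyond the threshold get tested, so treat it as a sketch.

```python
def threshold_for(eps):
    """For f(x) = 1/x and L = 0: x > 1/eps implies |1/x - 0| < eps."""
    return 1.0 / eps


def check_tail(f, L, eps, threshold, samples=1000):
    """Spot-check |f(x) - L| < eps at sampled points x > threshold."""
    points = [threshold * (1.0 + k) for k in range(1, samples + 1)]
    return all(abs(f(x) - L) < eps for x in points)


def reciprocal(x):
    return 1.0 / x


tail_ok = [check_tail(reciprocal, 0.0, eps, threshold_for(eps))
           for eps in (0.5, 1e-3, 1e-6)]
print(tail_ok)
```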

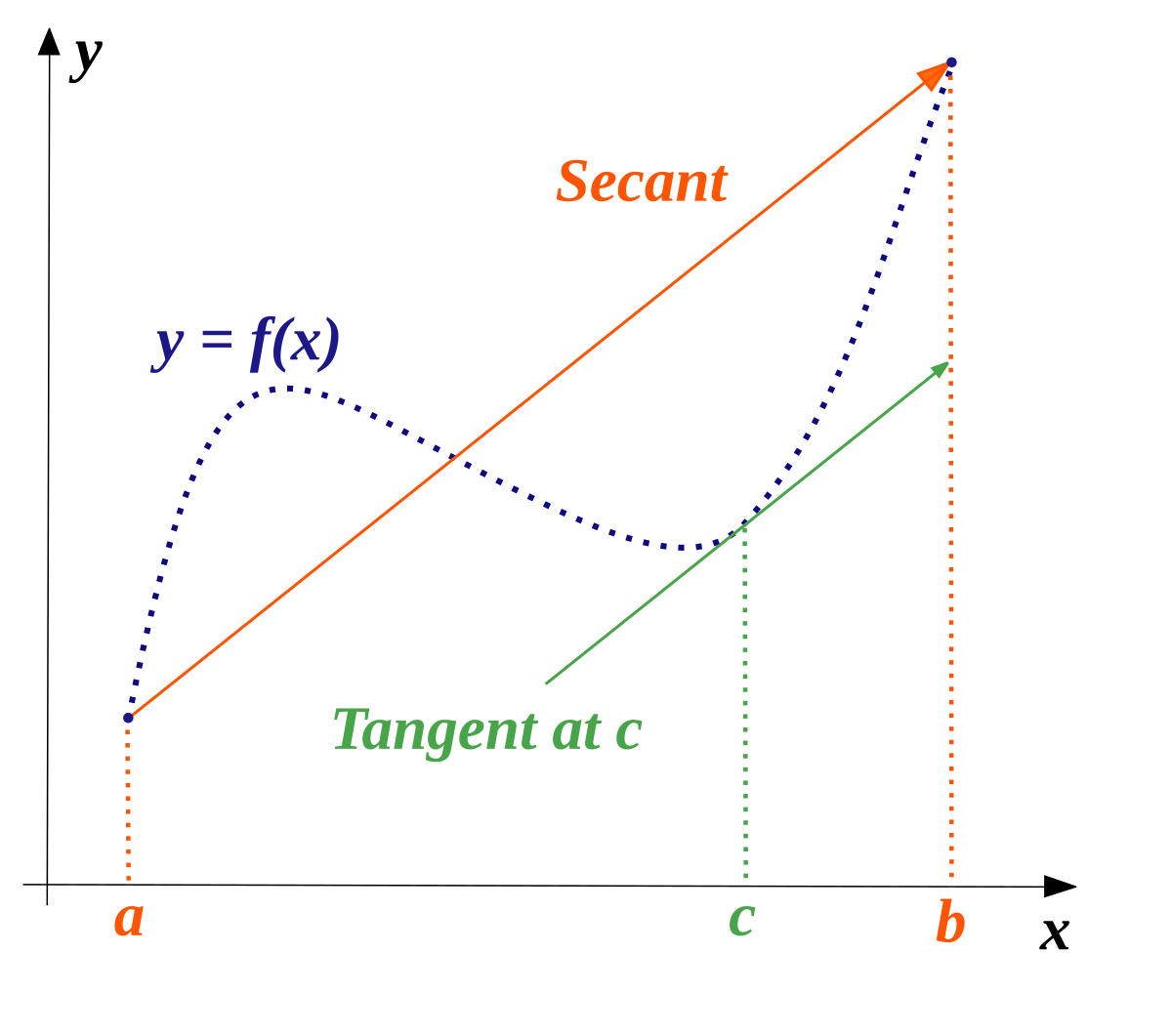

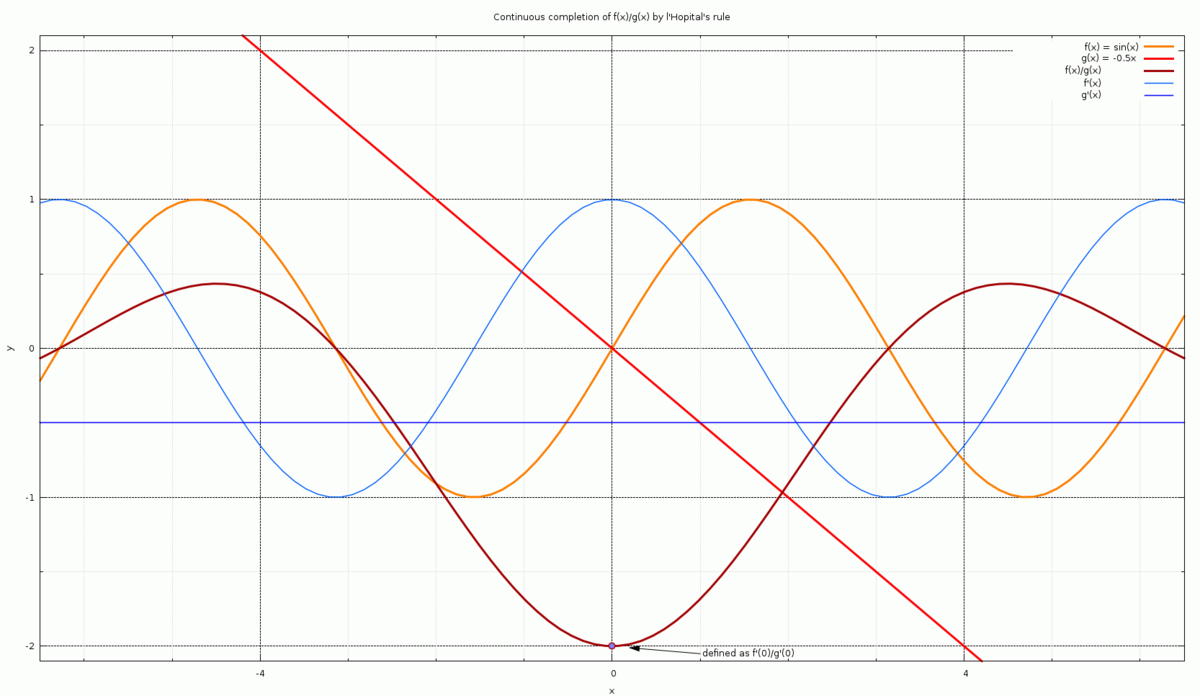

Thus, one can define taking a derivative with a limit:

f'(x) = limit of (f(x+h) - f(x))/h as h tends to 0

instead of making h an infinitesimal.
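One can watch this limit happen numerically. The following Python sketch (the name `difference_quotient` is just my label for the expression above) evaluates the quotient for f = sin at x = 1 with shrinking h and compares it with the known derivative cos(1). It assumes h stays large enough that floating-point roundoff does not dominate.

```python
import math


def difference_quotient(f, x, h):
    """The quantity (f(x+h) - f(x))/h whose limit as h -> 0 is f'(x)."""
    return (f(x + h) - f(x)) / h


steps = [10.0 ** -k for k in range(1, 7)]  # h = 0.1, 0.01, ..., 1e-6
quotients = [difference_quotient(math.sin, 1.0, h) for h in steps]
errors = [abs(q - math.cos(1.0)) for q in quotients]

for h, q, err in zip(steps, quotients, errors):
    print(f"h = {h:.0e}: quotient = {q:.8f}, error = {err:.2e}")
```

The error shrinks roughly in proportion to h, which is what one expects from a one-sided difference quotient.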
