steve_bank

Diabetic retinopathy and poor eyesight. Typos ...

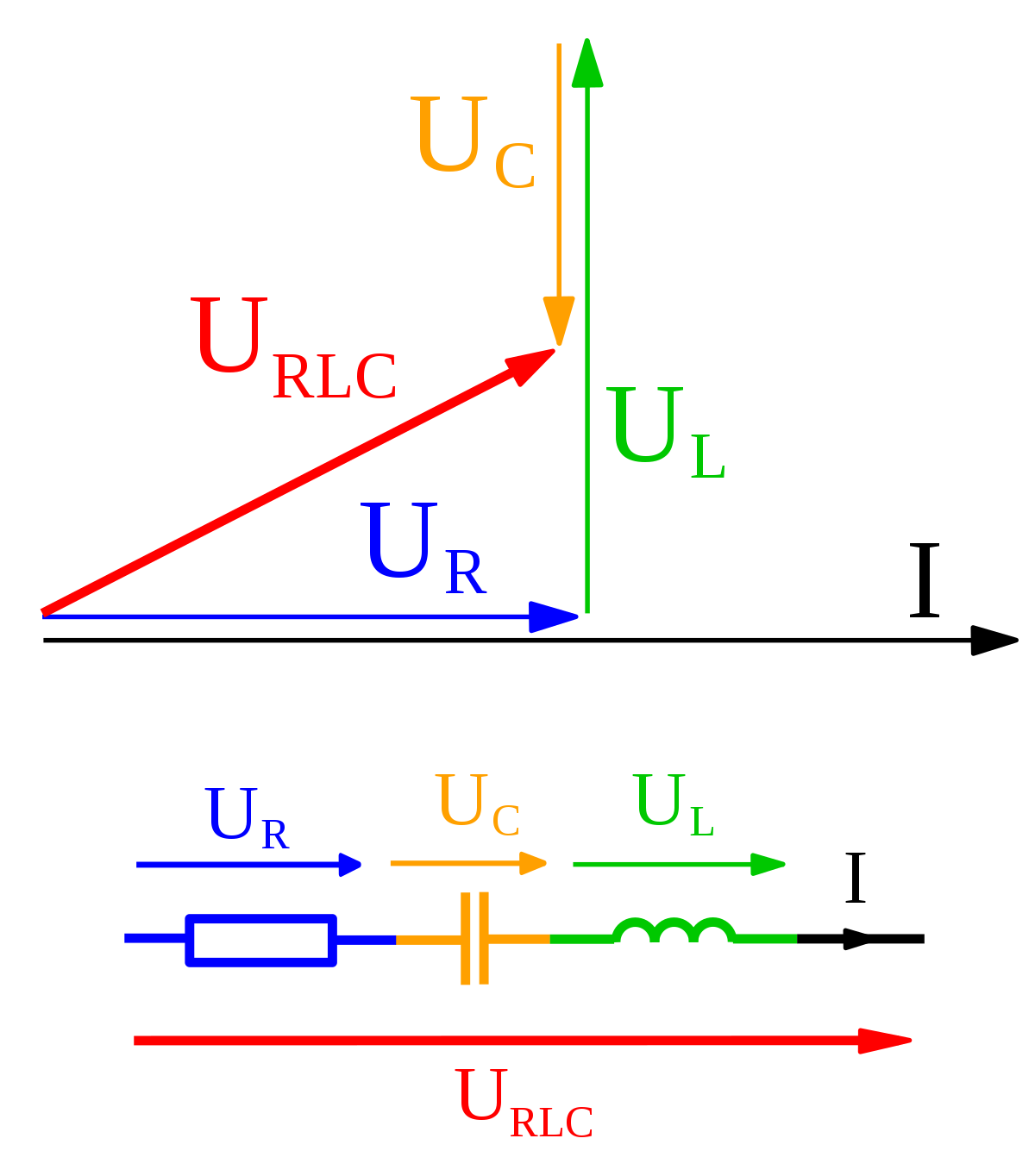

Complex numbers

Magnitude = sqrt(real^2 + imaginary^2).

Phase = atan(imaginary/real).
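A plain atan(imaginary/real) cannot tell opposite quadrants apart and divides by zero when the real part is 0, so a quadrant-aware arctangent is the usual choice. A minimal sketch of the two formulas above; the helper names `magnitude` and `phase_deg` are made up for illustration and are not part of any library:

```python
import cmath
import math

def magnitude(z):
    # sqrt(real^2 + imaginary^2)
    return math.hypot(z.real, z.imag)

def phase_deg(z):
    # atan2 looks at the signs of both parts, so it handles
    # real == 0 and the left half-plane
    return math.degrees(math.atan2(z.imag, z.real))

z = -1 + 1j
print(magnitude(z), phase_deg(z))
print(math.degrees(cmath.phase(z)))              # cmath.phase agrees with atan2
print(math.degrees(math.atan(z.imag / z.real)))  # about -45, the wrong quadrant
```

For z = -1 + 1j the atan2 form gives an angle near 135 degrees, while the plain atan form lands in the fourth quadrant.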

Code:

import math

import cmath

R2D = 360/(math.pi*2) # radians to degrees

y = 0 + 1j

print('%.5f \t%.5f' %(abs(y),cmath.phase(y)*R2D))

y = 0 - 1j

print('%.5f \t%.5f' %(abs(y),cmath.phase(y)*R2D))

y = 1 + 1j

print('%.5f \t%.5f' %(abs(y),cmath.phase(y)*R2D))

y = 1 - 1j

print('%.5f \t%.5f' %(abs(y),cmath.phase(y)*R2D))

1.00000 90.00000

1.00000 -90.00000

1.41421 45.00000

1.41421 -45.00000
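The same magnitude and phase can probably be had in one call. A small sketch, assuming the standard library functions `cmath.polar` and `cmath.rect` are acceptable here; `math.degrees` stands in for the R2D constant:

```python
import cmath
import math

y = 1 - 1j
mag, ph = cmath.polar(y)   # (magnitude, phase in radians)
print('%.5f \t%.5f' % (mag, math.degrees(ph)))

# cmath.rect goes the other way, polar back to rectangular
y_back = cmath.rect(mag, ph)
print(cmath.isclose(y, y_back))
```

The round trip through `cmath.rect` is only equal to within floating-point error, which is why the comparison uses `cmath.isclose` rather than `==`.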

Arrays of complex numbers

n = 10

x = n*[0. + 0.j] # create complex array

ph = n*[0]

m = n*[0]

for i in range(n):

    x[i] = i + 2*i*1j

    m[i] = abs(x[i])

    ph[i] = cmath.phase(x[i])*R2D

for i in range(n):

    print('%10.5f \t%10.5fi \t%10.5f \t%10.5f ' %(x[i].real,x[i].imag,m[i],ph[i]))
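One possible alternative, sketched with list comprehensions instead of pre-allocated lists. This is just another way to write the loop above, not a replacement for it; `math.degrees` is used in place of R2D:

```python
import cmath
import math

n = 10
x = [complex(i, 2 * i) for i in range(n)]        # same values as i + 2*i*1j
m = [abs(z) for z in x]                          # magnitudes
ph = [math.degrees(cmath.phase(z)) for z in x]   # phases in degrees

for z, mag, p in zip(x, m, ph):
    print('%10.5f \t%10.5fi \t%10.5f \t%10.5f' % (z.real, z.imag, mag, p))
```

For i >= 1 every element has the same phase, atan2(2, 1) or about 63.4 degrees, because the imaginary part is always twice the real part.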