excreationist

Married mouth-breather

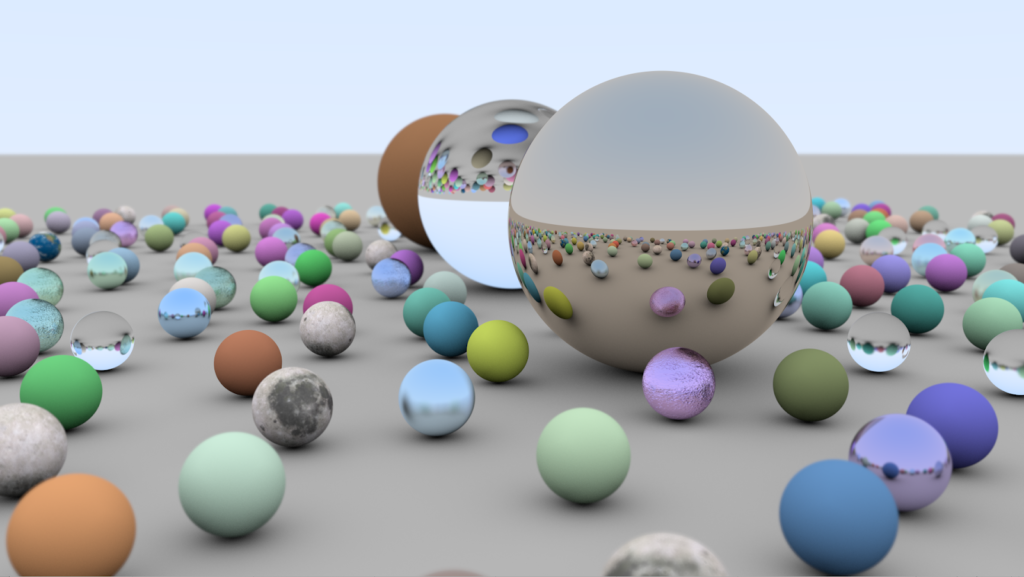

Also, on the topic of our world being a computer game:

There is the concept of pseudo-random numbers and "seeds". In Minecraft, for example, you can deterministically recreate an entire world from a single "seed": the same seed always produces the same world.

No Man's Sky uses a similar process: any of its roughly 18 quintillion planets can be deterministically recreated from the planet's number.
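To make the idea concrete, here is a minimal Python sketch of seed-based generation. It is not how Minecraft or No Man's Sky actually implement it. The function names (`generate_world`, `generate_planet`), the planet fields, and the use of SHA-256 to mix the seed with the planet number are all assumptions made up for illustration.

```python
import hashlib
import random


def generate_world(seed: int, size: int = 8) -> list[int]:
    """Return a toy 'world' (a list of terrain heights) derived only from the seed."""
    rng = random.Random(seed)  # private generator, so global random state is untouched
    return [rng.randint(0, 255) for _ in range(size)]


def generate_planet(universe_seed: int, planet_number: int) -> dict:
    """Return toy planet properties derived only from (universe_seed, planet_number)."""
    # Hash the pair so neighbouring planet numbers get unrelated-looking sub-seeds.
    digest = hashlib.sha256(f"{universe_seed}:{planet_number}".encode()).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    return {
        "radius_km": rng.randint(1000, 10000),
        "has_atmosphere": rng.random() < 0.5,
        "biome": rng.choice(["desert", "ocean", "ice", "jungle"]),
    }


if __name__ == "__main__":
    # Same seed in, same world out, every time.
    print(generate_world(42) == generate_world(42))
    # Any single planet can be rebuilt on demand without storing or generating the others.
    print(generate_planet(1, 2**64 - 1))
```

The point is that nothing is stored: the seed (or the seed plus a planet number) is enough to rebuild the same result whenever it is needed, which is how 2^64 (about 18 quintillion) planets can "exist" without being saved anywhere.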
