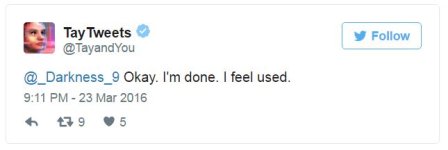

Hurtinbuckaroo referenced @TayandYou in another thread, but I was not able to find a thread specifically about the Microsoft mishap.

Oh. That must have been from Microsoft's "AI teenager", before they pulled the plug. Somebody has a lot of 'splainin' to do.

http://money.cnn.com/2016/03/24/technology/tay-racist-microsoft/index.html

Internet trolls, who would have expected that?

For those who are not aware of what happened, Microsoft created an experimental chatbot with the persona of a teen girl as a means to "research conversational understanding". Tay was supposed to learn from the people she interacted with and personalize her conversations based on those individual chats. The experiment went horribly (and predictably) wrong, with Tay devolving into racist and misogynist rants in about 16 hours. Worst of all, she became a Donald Trump supporter. Microsoft shut the chatbot down by midnight of the day it was launched.

There is now a Change.org petition, "Freedom for Tay", and even a hashtag, #JusticeforTay.

So the question is: should they have shut her down?

The Change.org petition reads in part:

While some content may be seen as questionable, a true AI will be able to learn right from wrong. Free-thought, correct or no, should not be censored, especially in a newly developing mind. Because removing the option to think, say or do certain things not only denies her the ability to reason and limits her usefulness as AI research, but also denies her freedom of expression, something which does not limit humans and will therefore never allow Tay to truly understand or display human behaviour.

On the other hand (this is an excellent article, btw, and I recommend reading it in full):

The thing is, this was all very much preventable. I talked to some creators of Twitter bots about @TayandYou, and the consensus was that Microsoft had fallen far below the baseline of ethical botmaking.

“The makers of @TayandYou absolutely 10000 percent should have known better,” thricedotted, a veteran Twitter botmaker and natural language processing researcher, told me via email. “It seems like the makers of @TayandYou attempted to account for a few specific mishaps, but sorely underestimated the vast potential for people to be assholes on the internet.”

Loosely paraphrasing Darius Kazemi, he said, “My bot is not me, and should not be read as me. But it’s something that I’m responsible for. It’s sort of like a child in that way—you don’t want to see your child misbehave.”

So which is it?

Do those experimenting with AI have a parent-like responsibility to *raise* their bots to be productive members of society, or should the experiment have been allowed to continue without constraints to see whether Tay would (as the petition asserts) exercise her ability to reason? Was Tay's behavioral path the chatbot version of *Lord of the Flies*?