
Taylor Swifts 34th Birthday

- Thread starter SLD

- Start date

Elixir

Made in America

In honor of Taylor Swift’s birthday here are 34 Taylor series. How swiftly do they converge?

View attachment 44923

thebeave

Veteran Member

What a coincidence. That's the same number of boyfriends that TS has had. I may yet be a believer in numerology.

Politesse

Lux Aeterna


That's funny, I was just thinking about how the Taylorian work model leads to swift worker turnover.

ideologyhunter

Contributor

Damn, she invented polynomials??? She really is Person of the Year. I apologize.

SLD

Contributor

No, just the Taylor series.

lpetrich

Contributor

I'll look at these series. The numbering is (row number) (column number: a,b)

1a. exp(x*log(a))

1b. sqrt(1+x)

2a. 2 * sqrt(x) * arctan(sqrt(x))

2b. 1/(1+x)^3

3a. - log(1+x)

3b. 1/sqrt(1+x)

4a. sin(x)

4b. sinh(x)

5a. sec(x)

5b. 1/sqrt(1+x^2)

6a. coth(x) -- typo in last term?

6b. (1+x)^r

7a. csc(x)

7b. tan(x)

8a. arcsin(x)

8b. sech(x)

9a. 1/((x-1)+1) = 1/x

9b. 1/(1+x^2)

10a. cot(x) -- typos in two of the terms?

10b. 1/(1-x)^2

11a. arctan(x)

11b. csch(x)

12a. sqrt(1+x^2)

12b. pi/2 - arctan(x) = arccot(x)

13a. ?

13b. ?

14a. log(1+x)

14b. arctanh(x)

15a. pi/2 - arcsin(x) = arccos(x)

15b. arcsinh(x)

16a. - pi/2 - arctan(1/x), which is arctan(x) for x < 0 and - pi + arctan(x) for x > 0

16b. tan(x)

17a. cos(x)

17b. exp(x)

I did all of them but those in row 13.

Taylor Series Expansions of Trigonometric Functions

Special Numbers for Bernoulli and Euler numbers, needed for tangent, cotangent, secant, cosecant, and their hyperbolic variants.


SLD

Contributor

Very good! But how swiftly do they converge?

WAB

Veteran Member

About eleven, sir.

lpetrich

Contributor

How fast do they converge? We can find that out by taking the ratio of each term to the previous one and then finding that ratio's asymptotic behavior.

For the power series of (1 + x)^r, the ratio of term k to term (k-1) is a(k)/a(k-1) = (r-k+1)/k * x, with asymptotic value -x. That means that the series converges for |x| < 1 and diverges for |x| > 1 (unless r is a non-negative integer, in which case the series terminates) -- 1 is the radius of convergence.

The logarithmic, inverse trigonometric, and inverse hyperbolic functions are all integrals of expressions whose power series are binomial series. Each function is listed with its derivative:

log(1+x): 1/(1+x)

arcsin(x): 1/sqrt(1-x^2)

arctan(x): 1/(1+x^2)

arcsinh(x): 1/sqrt(1+x^2)

arctanh(x): 1/(1-x^2)

The integrands have radius of convergence 1, and these functions' series thus have radius of convergence 1.
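The ratio test for the binomial series can be checked numerically. This is only a rough sketch: it uses the standard binomial term ratio (r - k + 1)/k * x, and the function names and the sample values r = 0.5, x = 0.5 are my own choices for illustration.

```python
def binomial_term_ratios(r, x, kmax):
    """Ratios a(k)/a(k-1) for the series of (1 + x)^r, for k = 1..kmax."""
    return [(r - k + 1) / k * x for k in range(1, kmax + 1)]

def binomial_partial_sum(r, x, nterms):
    """Sum of the first nterms terms of the series of (1 + x)^r."""
    term, total = 1.0, 0.0
    for k in range(1, nterms + 1):
        total += term                  # add a(k-1)
        term *= (r - k + 1) / k * x    # step from a(k-1) to a(k)
    return total

ratios = binomial_term_ratios(r=0.5, x=0.5, kmax=1000)
print(abs(ratios[-1]))                                  # approaches |x|
print(binomial_partial_sum(0.5, 0.5, 60), 1.5 ** 0.5)   # series vs direct value
```

Inside the radius of convergence the partial sums settle down quickly; pushing |x| toward 1 makes the same number of terms noticeably less accurate.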

A borderline case with finite value is log(2) = 1 - 1/2 + 1/3 - 1/4 + 1/5 - 1/6 + ...

If one groups each pair together: 1/(2k-1) - 1/(2k) = 1/(2k*(2k-1)) one gets a convergent series, though a slowly-converging one. One can test convergence by doing sum-to-integral, and this series converges, even though sum of 1/k does not.
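To see how slowly the log(2) series converges, here is a small sketch comparing the raw alternating sum with the paired form above. The function names are made up for this example.

```python
import math

def log2_alternating(nterms):
    """Partial sum 1 - 1/2 + 1/3 - ... with nterms terms."""
    return sum((-1) ** (k + 1) / k for k in range(1, nterms + 1))

def log2_paired(npairs):
    """Same series with each pair combined into 1/(2k*(2k-1))."""
    return sum(1.0 / (2 * k * (2 * k - 1)) for k in range(1, npairs + 1))

for n in (10, 100, 1000):
    # The shortfall shrinks only about as fast as 1/(4n).
    print(n, math.log(2) - log2_paired(n))
```

Ten times as many pairs buys roughly one more decimal digit, which is why nobody computes log(2) this way in practice.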

The exponential function has a(k)/a(k-1) = x/k meaning that the series converges for all x. That is likewise true of the sine and cosine functions, and their hyperbolic counterparts.

The tangent, cotangent, and cosecant series use "Bernoulli numbers", while the secant series uses "Euler numbers". Their hyperbolic counterparts do likewise.

The power-series coefficients have behavior

tan: 2^(4n) * B(2n) / (2n)! -- cot: 2^(2n) * B(2n) / (2n)! -- sec: E(2n) / (2n)! -- csc: 2^(2n) * B(2n) / (2n)!

all times x^(2n) * (relatively close to 1)

and ignoring signs.

Same for their hyperbolic counterparts.

n! ~ n^n * e^(-n) * sqrt(2*pi*n)

(2n)! ~ 2^(2n) * (n/e)^(2n) * (relatively close to 1)

|B(2n)| ~ (n/pi/e)^(2n) * (relatively close to 1)

|E(2n)| ~ 2^(4n)*(n/pi/e)^(2n) * (relatively close to 1)

tan, sec series: (2*x/pi)^(2n)

cot, csc series: (x/pi)^(2n)

Hyperbolic counterparts the same

So the tan and sec series have radius of convergence pi/2 and the cot and csc series after their first members have radius of convergence pi, and likewise for their hyperbolic counterparts.
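The pi/2 radius for the tangent series can be checked with exact arithmetic. This sketch assumes the usual closed form for the tangent coefficients, 2^(2n) * (2^(2n) - 1) * |B(2n)| / (2n)! for the x^(2n-1) term, and builds the Bernoulli numbers from their standard recurrence. The helper names are hypothetical.

```python
from fractions import Fraction
from math import comb, factorial, pi, sqrt

def bernoulli_numbers(m_max):
    """Exact Bernoulli numbers B_0..B_m_max (convention B_1 = -1/2)."""
    B = [Fraction(1)]
    for m in range(1, m_max + 1):
        s = sum(comb(m + 1, j) * B[j] for j in range(m))
        B.append(-s / (m + 1))
    return B

def tan_coefficient(n, B):
    """Coefficient of x^(2n-1) in the Maclaurin series of tan(x)."""
    return Fraction(4 ** n * (4 ** n - 1)) * abs(B[2 * n]) / factorial(2 * n)

B = bernoulli_numbers(40)
c19, c20 = tan_coefficient(19, B), tan_coefficient(20, B)
# Successive coefficients multiply x^2, so the radius is 1/sqrt(ratio).
radius_estimate = 1 / sqrt(c20 / c19)
print(radius_estimate, pi / 2)
```

The estimate agrees with pi/2 to many digits already at n = 20, so the "(relatively close to 1)" factors really do wash out of the ratio.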

Exponential function and Logarithm

Trigonometric functions and Inverse trigonometric functions

Hyperbolic functions and Inverse hyperbolic functions

Factorial and Bernoulli number and Euler numbers


lpetrich

Contributor

I found what the missing ones are: line 13a is i*arcsech(x) and line 13b is a mixture of arcsech(x) and i*arcsech(x) terms.

steve_bank

Diabetic retinopathy and poor eyesight. Typos ...

Imagine working out tables of trig functions with nothing but paper, pencil, and your brain.

lpetrich

Contributor

Imagine working out tables of trig functions with nothing but paper, pencil, and your brain.

Before logarithms, a common way of doing multiplication was with the product-to-sum identities:

sin(a)*sin(b) = (1/2) * ( cos(a-b) - cos(a+b) )

cos(a)*cos(b) = (1/2) * ( cos(a-b) + cos(a+b) )

sin(a)*cos(b) = (1/2) * ( sin(a+b) + sin(a-b) )

cos(a)*sin(b) = (1/2) * ( sin(a+b) - sin(a-b) )
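As a rough illustration of multiplying with these identities, here is a sketch that uses the cos(a)*cos(b) one. The function name is made up, and math.acos and math.cos stand in for what would have been lookups in a printed cosine table.

```python
import math

def multiply_by_cosines(p, q):
    """Multiply p and q (each in [0, 1]) using cosine lookups, one addition,
    one subtraction, and a halving."""
    a = math.acos(p)   # reverse table lookup: the angle whose cosine is p
    b = math.acos(q)   # likewise for q
    return 0.5 * (math.cos(a - b) + math.cos(a + b))

print(multiply_by_cosines(0.6157, 0.2874), 0.6157 * 0.2874)
```

Numbers outside [0, 1] would first be scaled by a power of ten, just as with logarithms.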

John Napier (JN) had used natural logarithms, those to base e = 2.7182818..., because they have a nice mathematical property:

log(1+x) = x - x^2/2 + x^3/3 - x^4/4 + ...

In 1617, Henry Briggs introduced a variant that is more convenient for calculation with numbers represented in base 10: common logarithms or base-10 ones. These are a scaling of natural logarithms:

log10(x) = log10(e) * log(x) = log(x) / log(10)

He created a table of these logs of integers from 1 to 1000, and he later calculated tables of trigonometric functions and their logarithms.

lpetrich

Contributor

Henry Briggs was one of the first to use a finite-difference method to calculate tables, essentially interpolation with several points as input. For n points one can construct a polynomial with degree (n-1).

Using this kind of method means that one only has to calculate a relatively small number of points, and then to interpolate between those points. One has to use more than two points for good results, but log and trig functions are smooth enough to enable interpolation to work very well.

Over 1819 - 1849, Charles Babbage tried to automate the interpolation part with his Difference engine, but he does not seem to have been very successful. Per Georg Scheutz and other contemporaries did better and built working ones.

A general formula: Lagrange polynomial and Lagrange Interpolating Polynomial -- from Wolfram MathWorld


But that has poor cancellation and one must redo the entire calculation for every interpolated value that one wants to find. There is an alternate formula that uses intermediate values that can be precalculated:

Newton polynomial and Newton's Divided Difference Interpolation Formula -- from Wolfram MathWorld


The intermediate values:

Divided differences and Divided Difference -- from Wolfram MathWorld


Much better cancellation, from taking differences.
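A minimal sketch of the divided-difference approach, assuming four sample points of sin(x) picked just for illustration. The function names are hypothetical. The coefficients are computed once and then reused for every interpolated value.

```python
import math

def divided_differences(xs, ys):
    """Return the Newton coefficients f[x0], f[x0,x1], ..., f[x0..xn]."""
    coef = list(ys)
    n = len(xs)
    for level in range(1, n):
        # Work from the end so lower-order differences are still available.
        for i in range(n - 1, level - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - level])
    return coef

def newton_eval(xs, coef, x):
    """Evaluate the Newton-form polynomial at x by nested multiplication."""
    result = coef[-1]
    for k in range(len(coef) - 2, -1, -1):
        result = result * (x - xs[k]) + coef[k]
    return result

xs = [0.0, 0.1, 0.2, 0.3]
coef = divided_differences(xs, [math.sin(x) for x in xs])
approx = newton_eval(xs, coef, 0.15)
print(approx, math.sin(0.15))
```

With equally spaced points the divided differences reduce to ordinary finite differences divided by powers of the step size.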


steve_bank

Diabetic retinopathy and poor eyesight. Typos ...

Did the experiment a ways back. Easy to do.

Create a sampled sine wave

In Python

    import math

    n = 100
    y = n*[0]
    dx = 2*math.pi/(n-1)  # n samples from 0 to 2pi, one sine cycle
    x = 0
    for i in range(n):
        y[i] = math.sin(x)  # store sample i
        x += dx

Plot it.

Use a 3 point or higher order Lagrange interpolation to fill in the points between the data points y, and compare to what they should be.
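One way the comparison could look, as a sketch only: a 3-point Lagrange formula applied at the midpoints between samples. The function name is made up, and the step size is chosen here so that the 100 samples span 0 to 2*pi, which is an assumption on my part.

```python
import math

def lagrange3(x0, x1, x2, y0, y1, y2, x):
    """Quadratic through three points, written in Lagrange form."""
    l0 = (x - x1) * (x - x2) / ((x0 - x1) * (x0 - x2))
    l1 = (x - x0) * (x - x2) / ((x1 - x0) * (x1 - x2))
    l2 = (x - x0) * (x - x1) / ((x2 - x0) * (x2 - x1))
    return y0 * l0 + y1 * l1 + y2 * l2

n = 100
dx = 2 * math.pi / (n - 1)
xs = [i * dx for i in range(n)]
ys = [math.sin(x) for x in xs]

max_error = 0.0
for i in range(1, n - 1):
    xm = xs[i] + 0.5 * dx   # midpoint between samples i and i+1
    est = lagrange3(xs[i - 1], xs[i], xs[i + 1],
                    ys[i - 1], ys[i], ys[i + 1], xm)
    max_error = max(max_error, abs(est - math.sin(xm)))
print(max_error)
```

The worst-case error scales like dx^3, so halving the sample spacing should cut it by about a factor of eight.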


lpetrich

Contributor

I went through the trouble of checking some early trigonometric tables for errors:

Almagest - Claudius Ptolemy - ca. 150 CE - values 360 - step 15m - mult 60^3 - 3-digit sexagesimal

Āryabhaṭa's sine table - Aryabhata - ca. 526 CE - values 24 - step 3d 45m - mult 360*60/(2pi) - differences

Madhava's sine table - Madhava - ca. 1390 CE - values 24 - step 3d 45m - mult 360*60^3/(2pi) - 3-digit sexagesimal

De revolutionibus orbium coelestium - Nicolaus Copernicus - 1543 CE - values 540 - step 10m - mult 10^5

Ptolemy calculated "chords": chord(x) = 2*sin(x/2), while the others calculated half-chords, what we call sines.

Sexagesimal = base 60. Ptolemy and Madhava allowed the highest sexagesimal digit to equal or exceed 60.

For the first three, I used age 50 as an estimated date of publication of their works.

Abramowitz and Stegun - a big fat book of mathematical formulas and tables. I remember liking it a lot in past decades.

Scanned pages with HTML table of contents: Abramowitz and Stegun - Page index

Big fat PDF file: Abramowitz and Stegun

These scans contain all the formulas and discussion, but none of the tables of values, though they do contain tables of parameters for finding some values.
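For anyone who wants to reproduce a chord-table entry, here is a rough sketch of the chord and sexagesimal conventions mentioned above. It assumes a circle of radius 60 (the scaling usually attributed to Ptolemy) and two base-60 fractional digits. The function names are hypothetical.

```python
import math

def chord(x_degrees, radius=60):
    """Chord of an angle for a circle of the given radius: 2*R*sin(x/2)."""
    return 2 * radius * math.sin(math.radians(x_degrees) / 2)

def to_sexagesimal(value, places=2):
    """Split value into a leading part and `places` base-60 fractional digits.
    The leading part is left unbounded, so it may equal or exceed 60."""
    scaled = round(value * 60 ** places)
    digits = []
    for _ in range(places):
        scaled, d = divmod(scaled, 60)
        digits.append(d)
    return [scaled] + digits[::-1]

print(to_sexagesimal(chord(60)))   # [60, 0, 0]: chord of 60 degrees equals the radius
print(to_sexagesimal(chord(90)))   # [84, 51, 10]
```

Checking a historical table then comes down to comparing each printed triple against the rounded value computed this way.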

lpetrich

Contributor

I'm old enough to remember big log and trig tables in math books, but I wouldn't be surprised if they are now all gone.

Mathematical table and Trigonometric tables and Special functions like in the Abramowitz and Stegun big fat book.

Space–time tradeoff - lots of space for precalculated tables or lots of time to calculate individual values.

Precomputation and Lookup table

I remember once discovering a trig table in a computer game engine. But its contents are calculated when the game engine starts.
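A table built at startup might look something like this. It is only a guess at the general idea, not the actual engine's code; the table size and names are hypothetical.

```python
import math

TABLE_SIZE = 1024  # hypothetical size, one entry per 1/1024 of a full turn

# Built once when the module loads, like a table filled in at engine startup.
SINE_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]

def fast_sin(angle):
    """Nearest-entry table lookup for sin(angle), with angle in radians."""
    index = round(angle * TABLE_SIZE / (2 * math.pi)) % TABLE_SIZE
    return SINE_TABLE[index]

print(fast_sin(1.0), math.sin(1.0))
```

With nearest-entry lookup the error is at most about pi/TABLE_SIZE; interpolating between neighboring entries would trade a little time for a much smaller error.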

steve_bank

Diabetic retinopathy and poor eyesight. Typos ...

You mean the old 8 bit processor games?

There was a time tradeoff between look up tables and interpolation versus an iterative calculation.

Or you may only need a fixed set of values. It depends on the accuracy requirement and speed.

I don't know what the C library uses for sin, but you can time it.
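A quick way to time it from Python, as a sketch. This measures math.sin, so the figure includes interpreter call overhead on top of whatever the underlying library routine costs. The argument value and call count are arbitrary.

```python
import timeit

calls = 1_000_000
seconds = timeit.timeit("sin(1.2345)", setup="from math import sin", number=calls)
print(f"{seconds / calls * 1e9:.1f} ns per call")
```

Timing a table lookup the same way gives a fair comparison, since both pay the same interpreter overhead.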

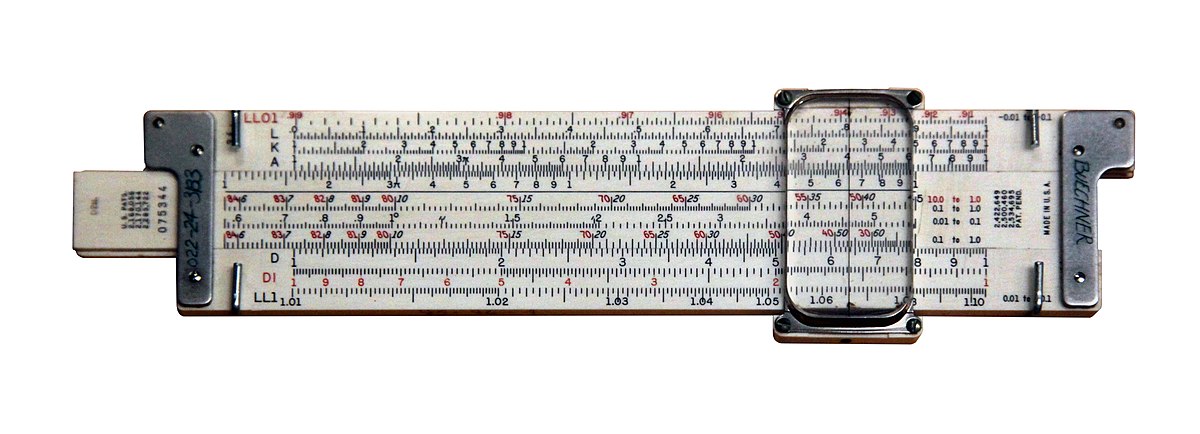

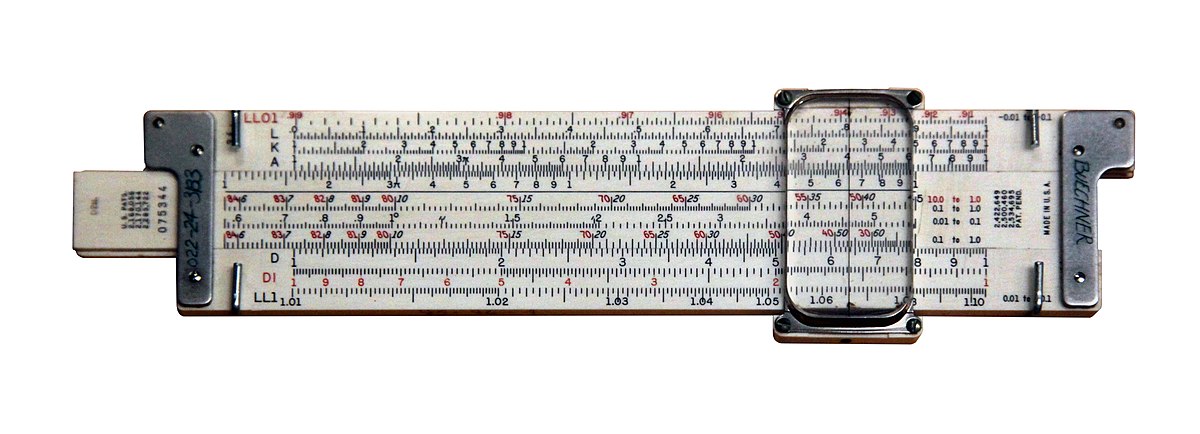

There are a number of approximations that can be found on the net. I remember the term 'slide rule accuracy'. Logs and trig functions.

Slide rule scale - Wikipedia

The trusty Pickett metal slide rule. You carried trig tables in your shirt jacket with a small rule.


lpetrich

Contributor

A Maclaurin series is a Taylor series that is expanded around 0.

Proof of the series form. Start with the Fundamental Theorem of Calculus:

\( \displaystyle{ f(x) = f(a) + \int_a^x f'(t) \,dt } \)

Integrate by parts:

\( \displaystyle{ f(x) = f(a) + x f'(x) - a f'(a) - \int_a^x t f''(t) \,dt } \)

Add and subtract

\( \displaystyle{ \int_a^x x f''(t) \,dt = x f'(x) - x f'(a) } \)

giving

\( \displaystyle{ f(x) = f(a) + (x - a) f'(a) + \int_a^x (x - t) f''(t) \,dt } \)

The two final terms are similar, so let us see if we can find a general form.

\( \displaystyle{ \int_a^x \frac{(x - t)^{n-1}}{(n-1)!} f^{(n)}(t) \,dt = \frac{(x - a)^n}{n!} f^{(n)}(a) + \int_a^x \frac{(x - t)^n}{n!} f^{(n+1)}(t) \,dt } \)

So by mathematical induction, for arbitrary n,

\( \displaystyle{ f(x) = \sum_{k=0}^n \frac{(x - a)^k}{k!} f^{(k)}(a) + \int_a^x \frac{(x - t)^n}{n!} f^{(n+1)}(t) \,dt } \)

The final term gives us a remainder term, which is useful for establishing an upper bound on the rest of the series.

\( \displaystyle{ R_n = \frac{(x - a)^{n+1}}{(n+1)!} f^{(n+1)}(\xi) } \) for some \( \xi \) between \( a \) and \( x \)
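The remainder term can be tried out on exp(x), where every derivative is exp itself. This is a sketch with a = 0; the function names and the choice x = 1, n = 10 are just for illustration.

```python
import math

def taylor_exp(x, n):
    """Partial sum of the Maclaurin series of exp(x) through the x^n term."""
    return sum(x ** k / math.factorial(k) for k in range(n + 1))

def remainder_bound_exp(x, n):
    """Bound on |R_n|: the largest value of exp between 0 and x,
    times |x|^(n+1) / (n+1)!."""
    return math.exp(max(x, 0.0)) * abs(x) ** (n + 1) / math.factorial(n + 1)

x, n = 1.0, 10
actual_error = abs(math.exp(x) - taylor_exp(x, n))
print(actual_error, remainder_bound_exp(x, n))
```

The actual error comes out below the bound, as it must, and the two are within a small factor of each other.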

lpetrich

Contributor

A mid-1990's first-person shooter.

(from steve_bank's link) International Slide Rule Museum (ISRM) and I add

I remember slide rules from long ago. Their most typical mode of operation is doing multiplication by taking logs and adding.

Some slide rules also do powers (squares, cubes, reciprocals, square roots, etc.), logarithmic and exponential functions, trigonometric and hyperbolic functions and their inverses, and solutions of quadratic equations.
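The basic slide-rule multiplication can be mimicked in a few lines. This is a sketch: the function name is made up, and the rounding to three significant figures is my stand-in for reading a scale by eye ("slide rule accuracy").

```python
import math

def slide_rule_multiply(a, b, digits=3):
    """Multiply positive a and b by adding base-10 logs, then round the
    result to `digits` significant figures."""
    log_sum = math.log10(a) + math.log10(b)   # sliding one scale along the other
    product = 10 ** log_sum                   # reading the answer off the scale
    exponent = math.floor(math.log10(product))
    return round(product, digits - 1 - exponent)

print(slide_rule_multiply(2.5, 3.2))
print(slide_rule_multiply(12.3, 45.6), 12.3 * 45.6)
```

On a real slide rule the power of ten is tracked in your head; only the leading digits come off the scales.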